Sqoop Introduction

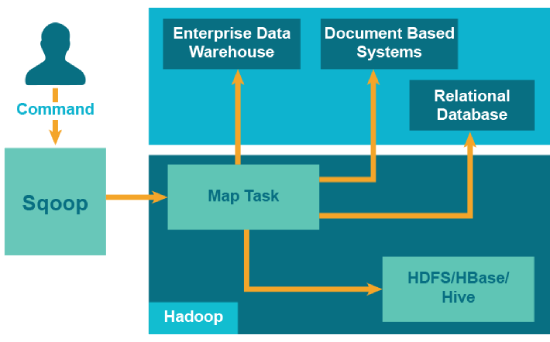

Apache Sqoop is a tool in the Hadoop ecosystem designed to transfer data between HDFS (Hadoop's distributed storage layer) and relational database servers such as MySQL, Oracle, SQLite, Teradata, Netezza, and PostgreSQL.

Apache Sqoop imports data from relational databases to HDFS, and also exports data from HDFS to relational databases. It efficiently transfers bulk data between Hadoop and external datastores such as enterprise data warehouses, relational databases, etc.

This is how Sqoop got its name – “SQL to Hadoop & Hadoop to SQL”.

Sqoop can also import data from external datastores directly into other tools in the Hadoop ecosystem, such as Hive and HBase.
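As a rough sketch of what importing into Hive or HBase can look like, the shell functions below wrap `sqoop import` with the `--hive-import` and `--hbase-table` options. The JDBC URL, user name, password file path, table name, column family, and row key column are placeholders invented for illustration; they are not taken from any real deployment.

```shell
# Placeholder connection settings (hypothetical; replace with your own).
JDBC_URL='jdbc:mysql://db.example.com/sales'
DB_USER='etl_user'
PASSWORD_FILE='/user/etl/.db-password'   # file holding the database password

# Import the "customers" table straight into a Hive table of the same name.
import_to_hive() {
  sqoop import \
    --connect "$JDBC_URL" \
    --username "$DB_USER" \
    --password-file "$PASSWORD_FILE" \
    --table customers \
    --hive-import \
    --hive-table customers
}

# Import the same table into HBase, assuming "id" is a suitable row key.
import_to_hbase() {
  sqoop import \
    --connect "$JDBC_URL" \
    --username "$DB_USER" \
    --password-file "$PASSWORD_FILE" \
    --table customers \
    --hbase-table customers \
    --column-family info \
    --hbase-row-key id \
    --hbase-create-table
}
```

The block only defines the functions. On a node with the Sqoop client installed, calling `import_to_hive` or `import_to_hbase` would start the corresponding job.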

What Shasta Has Done

We use Sqoop to transfer bulk data between Hadoop and relational databases: it imports data from databases such as MySQL and Oracle into HDFS, and exports data from HDFS back to those databases. It provides for easy interaction between the relational database server and HDFS. On import, each table row becomes a record in HDFS, stored either as text data in text files or as binary data in Avro files or SequenceFiles. On export, the files given as input to Sqoop contain records, which correspond to rows in the target table; Sqoop reads them and parses them into records according to a user-specified delimiter.
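The two directions described above might be sketched as follows. This is an illustration under assumptions, not the actual job configuration: the connection settings, the `orders` and `order_summary` tables, and the HDFS paths are all hypothetical.

```shell
# Placeholder connection settings (hypothetical; replace with your own).
JDBC_URL='jdbc:mysql://db.example.com/sales'
DB_USER='etl_user'
PASSWORD_FILE='/user/etl/.db-password'   # file holding the database password

# Import the "orders" table into HDFS as comma-delimited text files.
import_orders_as_text() {
  sqoop import \
    --connect "$JDBC_URL" \
    --username "$DB_USER" \
    --password-file "$PASSWORD_FILE" \
    --table orders \
    --target-dir /data/raw/orders \
    --as-textfile \
    --fields-terminated-by ','
}

# Import the same table as binary Avro data files instead of text.
import_orders_as_avro() {
  sqoop import \
    --connect "$JDBC_URL" \
    --username "$DB_USER" \
    --password-file "$PASSWORD_FILE" \
    --table orders \
    --target-dir /data/avro/orders \
    --as-avrodatafile
}

# Export comma-delimited files from HDFS into an existing database table.
export_order_summary() {
  sqoop export \
    --connect "$JDBC_URL" \
    --username "$DB_USER" \
    --password-file "$PASSWORD_FILE" \
    --table order_summary \
    --export-dir /data/out/order_summary \
    --input-fields-terminated-by ','
}
```

Note that `--fields-terminated-by` sets the delimiter Sqoop writes on import, while `--input-fields-terminated-by` tells it how to parse the input files on export.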

Key Features of Sqoop

- Full Load: Apache Sqoop can load a whole table with a single command. It can also load all the tables in a database with a single command.

- Incremental Load: Apache Sqoop also supports incremental loads, importing only the rows that were added or updated since the previous import.

- Parallel import/export: Sqoop runs imports and exports as MapReduce jobs on YARN, which provides fault tolerance on top of parallelism.

- Import results of SQL query: You can also import the result of an SQL query into HDFS.

- Compression: You can compress your data with the deflate (gzip) algorithm using the `--compress` argument, or choose another codec with the `--compression-codec` argument. You can also load compressed tables into Apache Hive.

- Connectors for major RDBMSs: Apache Sqoop provides connectors for most widely used relational databases.

- Kerberos Security Integration: Kerberos is a computer network authentication protocol which works on the basis of ‘tickets’ to allow nodes communicating over a non-secure network to prove their identity to one another in a secure manner. Sqoop supports Kerberos authentication.

- Load data directly into Hive/HBase: You can load data directly into Apache Hive for analysis, or write it to HBase, which is a NoSQL database.

- Support for Accumulo: You can also instruct Sqoop to import a table into Accumulo rather than into a directory in HDFS.
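Several of the features listed above map onto specific `sqoop` options. The sketch below shows a full load of all tables, an incremental append, and a parallel, compressed import of a query result. The connection settings, table and column names, HDFS paths, and the choice of four mappers and the Snappy codec are assumptions made for illustration only.

```shell
# Placeholder connection settings (hypothetical; replace with your own).
JDBC_URL='jdbc:mysql://db.example.com/sales'
DB_USER='etl_user'
PASSWORD_FILE='/user/etl/.db-password'   # file holding the database password

# Full load: import every table in the database under one parent directory.
# Sqoop expects each table to have a single-column primary key for this.
import_all_tables() {
  sqoop import-all-tables \
    --connect "$JDBC_URL" \
    --username "$DB_USER" \
    --password-file "$PASSWORD_FILE" \
    --warehouse-dir /data/raw/sales
}

# Incremental load: append only rows whose "id" is greater than the last
# imported value, passed as the first argument.
import_new_orders() {
  sqoop import \
    --connect "$JDBC_URL" \
    --username "$DB_USER" \
    --password-file "$PASSWORD_FILE" \
    --table orders \
    --target-dir /data/raw/orders \
    --incremental append \
    --check-column id \
    --last-value "$1"
}

# Query import: run a free-form query across 4 parallel mappers and compress
# the output. Single quotes keep the shell from expanding $CONDITIONS, which
# Sqoop replaces with each mapper's split predicate.
import_large_orders() {
  sqoop import \
    --connect "$JDBC_URL" \
    --username "$DB_USER" \
    --password-file "$PASSWORD_FILE" \
    --query 'SELECT o.id, o.total FROM orders o WHERE o.total > 100 AND $CONDITIONS' \
    --split-by o.id \
    --num-mappers 4 \
    --target-dir /data/raw/large_orders \
    --compress \
    --compression-codec org.apache.hadoop.io.compress.SnappyCodec
}
```

For example, `import_new_orders 1000` would request only rows with `id` above 1000. A free-form `--query` import needs an explicit `--target-dir`, and needs `--split-by` whenever more than one mapper is used.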