What Is Hadoop?

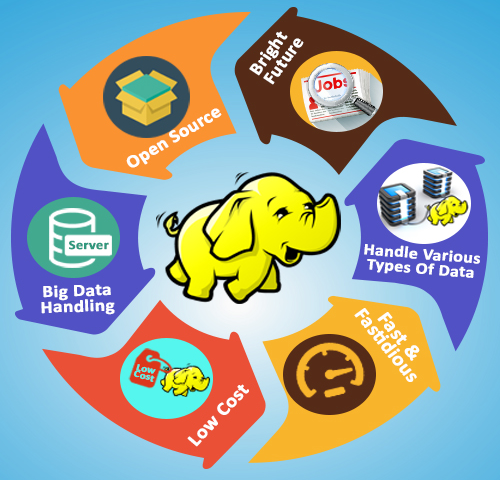

Apache Hadoop is an open-source software framework, created in 2005, that is specifically engineered for building and running big data and large-scale processing applications, something that traditional software isn't able to do.

The whole Hadoop framework relies on 4 main modules that work together:

- Hadoop Common is like the SDK for the whole Hadoop framework, providing the necessary libraries and utilities needed by the other 3 modules.

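- Hadoop Distributed File System (HDFS) is the file system that stores all of the data across the machines of a cluster and provides high-bandwidth access to it (loosely comparable to RAID, but spread across machines rather than disks).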

- Hadoop YARN is the module that manages the cluster's computational resources and schedules applications.

- Finally, Hadoop MapReduce is the programming model for writing large-scale, big data applications.
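
To make the MapReduce programming model more concrete, here is a minimal sketch of its three phases (map, shuffle, reduce) as a word count. It is plain Python running in a single process, not Hadoop's own API; the function names `map_phase`, `shuffle_phase`, `reduce_phase` and `word_count` are made up for this illustration.

```python
from collections import defaultdict


def map_phase(line):
    """Map: emit a (word, 1) pair for every word in one input line."""
    return [(word.lower(), 1) for word in line.split()]


def shuffle_phase(pairs):
    """Shuffle: group all emitted values by their key."""
    grouped = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return grouped


def reduce_phase(key, values):
    """Reduce: collapse the values for one key into a single result."""
    return key, sum(values)


def word_count(lines):
    """Run the three phases in sequence on a list of text lines."""
    mapped = [pair for line in lines for pair in map_phase(line)]
    grouped = shuffle_phase(mapped)
    return dict(reduce_phase(key, values) for key, values in grouped.items())


if __name__ == "__main__":
    sample = ["big data big clusters", "data moves to clusters"]
    print(word_count(sample))
```

On a real cluster, the map and reduce calls would run in parallel on different nodes, and the framework would perform the shuffle over the network. The sketch only shows how the work is divided.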

Hadoop

- With the growth of mobile subscriptions and the widespread use of social media worldwide, there is a deluge of data in all forms: structured, semi-structured and unstructured.

- For data at this scale, traditional storage built for relational data has given way to concurrent, distributed data processing.

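- The Hadoop framework splits files into large blocks and stores them across the nodes of a cluster, keeping the file system metadata separate from the data itself. HDFS itself is block-based rather than columnar; columnar formats can be layered on top of it.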

- The data is replicated across nodes so that the failure of any single node is handled automatically.

- The Hadoop platform is well suited to building statistical and forecasting models using machine learning and AI.
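
The replication idea above can be sketched in a few lines. The block size of 128 MB and the replication factor of 3 are the HDFS defaults. Everything else is an assumption made for illustration: the node names, the helper names `place_blocks` and `readable_after_failure`, and the round-robin placement, which stands in for the rack-aware policy that real HDFS uses.

```python
import math

BLOCK_SIZE_MB = 128      # HDFS default block size (Hadoop 2.x and later)
REPLICATION_FACTOR = 3   # HDFS default replication factor


def place_blocks(file_size_mb, nodes,
                 block_size_mb=BLOCK_SIZE_MB, replication=REPLICATION_FACTOR):
    """Return {block_index: [node, ...]} using simple round-robin placement.

    Real HDFS placement is rack-aware; round-robin is a simplification.
    """
    if replication > len(nodes):
        raise ValueError("replication factor exceeds number of nodes")
    num_blocks = math.ceil(file_size_mb / block_size_mb)
    placement = {}
    for block in range(num_blocks):
        # Each replica of a block goes to a different node.
        placement[block] = [nodes[(block + r) % len(nodes)]
                            for r in range(replication)]
    return placement


def readable_after_failure(placement, failed_nodes):
    """A file stays readable if every block keeps at least one live replica."""
    failed = set(failed_nodes)
    return all(any(node not in failed for node in replicas)
               for replicas in placement.values())


if __name__ == "__main__":
    data_nodes = [f"dn{i}" for i in range(1, 9)]   # hypothetical node names
    layout = place_blocks(1000, data_nodes)        # a 1000 MB file
    print(len(layout), "blocks")
    print(readable_after_failure(layout, ["dn1", "dn2"]))
```

With three replicas per block, losing any two nodes still leaves at least one copy of every block, which is why a single failed node does not need manual intervention.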

What Shasta Has Done

- We use the Hadoop framework, which allows distributed processing of large datasets across clusters of computers.

- We run a 10-node cluster for the Hadoop file system.

- The primary NameNode stores only the HDFS metadata: the directory tree of all files and the location of each file's blocks across the cluster.

- The Secondary NameNode is a dedicated node in the HDFS cluster whose main function is to take checkpoints of the file system metadata held on the NameNode. We store the data itself on the remaining 8 nodes.

- MapReduce handles the parallel processing of large data sets, and YARN handles cluster resource management and job scheduling. We store a large volume of structured, semi-structured and unstructured data in the Hadoop file system. HDFS replicates the data across multiple nodes for fault tolerance, which also helps read throughput.
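
The division of labour between the NameNode and the Secondary NameNode can be illustrated with a toy model. In HDFS, the NameNode keeps a checkpointed namespace image (the fsimage) plus a log of later edits, and a checkpoint merges the two. The class `ToyNameNode`, the helpers `apply_edits` and `checkpoint`, and the sample paths and block IDs below are hypothetical names for this sketch, not Hadoop APIs.

```python
import copy


class ToyNameNode:
    """Holds metadata only: file paths, their block IDs, and pending edits."""

    def __init__(self):
        self.fsimage = {}    # last checkpointed namespace: path -> [block IDs]
        self.edit_log = []   # changes recorded since the last checkpoint

    def create_file(self, path, block_ids):
        self.edit_log.append(("create", path, list(block_ids)))

    def delete_file(self, path):
        self.edit_log.append(("delete", path, None))

    def current_namespace(self):
        """Replay the edit log on top of the last checkpoint."""
        return apply_edits(self.fsimage, self.edit_log)


def apply_edits(fsimage, edit_log):
    """Return a new namespace with every logged edit applied in order."""
    namespace = copy.deepcopy(fsimage)
    for op, path, block_ids in edit_log:
        if op == "create":
            namespace[path] = list(block_ids)
        elif op == "delete":
            namespace.pop(path, None)
    return namespace


def checkpoint(namenode):
    """Conceptually what the Secondary NameNode does: merge edits into a new fsimage."""
    namenode.fsimage = apply_edits(namenode.fsimage, namenode.edit_log)
    namenode.edit_log = []


if __name__ == "__main__":
    nn = ToyNameNode()
    nn.create_file("/logs/a.txt", ["blk_1", "blk_2"])
    nn.create_file("/logs/b.txt", ["blk_3"])
    nn.delete_file("/logs/a.txt")
    checkpoint(nn)
    print(nn.fsimage)
```

Note that the model holds no file contents at all. As in the setup described above, the blocks themselves live on the data-storing nodes, and the checkpoint only keeps the edit log from growing without bound.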