What is HBase?

HBase is a column-oriented database management system that runs on top of the Hadoop Distributed File System (HDFS). It is well suited to sparse data sets, which are common in many big data use cases.
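To make "sparse" concrete, here is a minimal Python sketch of the logical data model: a sorted map from row key to only the cells that actually exist. It is a toy illustration, not the HBase API; the class `SparseTable`, its methods, and the sample keys are hypothetical names chosen for this example.

```python
from collections import defaultdict


class SparseTable:
    """Toy model of a sparse, column-oriented table (not the HBase API)."""

    def __init__(self):
        # row key -> {"family:qualifier": value}; absent cells are simply not stored
        self._rows = defaultdict(dict)

    def put(self, row_key, column, value):
        self._rows[row_key][column] = value

    def get(self, row_key, column):
        # Use .get on the outer dict so a lookup never creates an empty row
        return self._rows.get(row_key, {}).get(column)

    def scan(self):
        # Yield rows in sorted row-key order, mirroring how HBase orders rows
        for key in sorted(self._rows):
            yield key, self._rows[key]

    def cell_count(self):
        return sum(len(cols) for cols in self._rows.values())


table = SparseTable()
table.put("user#002", "profile:name", "Lin")  # this row has no email cell at all
table.put("user#001", "profile:name", "Ada")
table.put("user#001", "profile:email", "ada@example.com")
```

Here `table.get("user#002", "profile:email")` returns `None` and the table holds three cells rather than four: a missing value costs nothing, which is what makes this layout attractive for sparse data. HBase compares row keys as raw bytes; Python string ordering stands in for that here.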

Features

- Linear and modular scalability.

- Strictly consistent reads and writes.

- Automatic and configurable sharding of tables.

- Automatic failover support between RegionServers.

- Convenient base classes for backing Hadoop MapReduce jobs with Apache HBase tables.

- Easy-to-use Java API for client access.

- Block cache and Bloom Filters for real-time queries.

- Query predicate pushdown via server-side Filters.

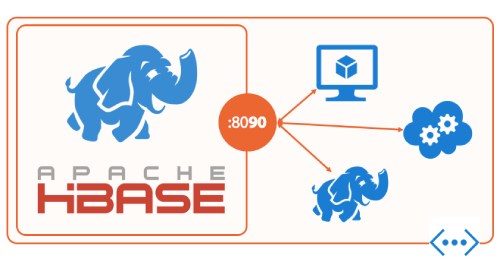

- Thrift gateway and a RESTful web service that supports XML, Protobuf, and binary data encoding options.

- Extensible JRuby-based (JIRB) shell.

- Support for exporting metrics via the Hadoop metrics subsystem to files or Ganglia, or via JMX.
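As a rough illustration of the table sharding mentioned in the list above, the following Python sketch shows how a row key can be mapped to one of several contiguous key ranges (HBase calls these regions). The split points and the function name `region_for` are assumptions made up for this example; a real cluster chooses and adjusts split points itself.

```python
from bisect import bisect_right

# Hypothetical split points. Region i holds keys in [start_i, end_i):
#   region 0: keys < "g"      region 1: "g" <= key < "n"
#   region 2: "n" <= key < "t"   region 3: keys >= "t"
SPLIT_KEYS = ["g", "n", "t"]


def region_for(row_key, split_keys=SPLIT_KEYS):
    """Return the index of the region whose key range contains row_key."""
    # bisect_right counts the split points <= row_key, so a key equal to a
    # split point lands in the region that starts at that split point.
    return bisect_right(split_keys, row_key)
```

Because regions are contiguous ranges of sorted keys, adjacent row keys usually live in the same region, which keeps range scans local to a small number of servers.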

Shasta Tek in HBase

Shasta Tek is well aware of the key differences between Cassandra and HBase in terms of their strengths and weaknesses. Although HBase requires a more elaborate supporting ecosystem for configuration, security, and availability, there are application areas that justify choosing HBase as the storage medium.

For example, because of the way Cassandra and HBase organize their data models, both are very good with time-series data: sensor readings in IoT systems, website visits, customer behavior, stock exchange data, and so on. Both store and read such values efficiently. Both are also scalable: Cassandra scales linearly, while HBase offers linear and modular scalability.
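One common way to lay out time-series data in a sorted key-value store is to build the row key from a source identifier plus a reversed timestamp, so that the newest reading for each source sorts first. The Python sketch below shows the idea under that assumption; `row_key`, `timestamp_of`, and the `sensor_id#timestamp` layout are hypothetical choices for illustration, not a scheme described by Shasta Tek.

```python
MAX_TS = 2**63 - 1  # largest signed 64-bit value, used to reverse timestamps


def row_key(sensor_id, ts_millis):
    """Build a key that sorts by sensor, then newest reading first."""
    reversed_ts = MAX_TS - ts_millis
    # Zero-pad to 19 digits so lexicographic order matches numeric order
    return f"{sensor_id}#{reversed_ts:019d}"


def timestamp_of(key):
    """Recover the original timestamp from a key built by row_key."""
    return MAX_TS - int(key.rsplit("#", 1)[1])


# Keys sort the way a scan would return them: per sensor, newest first
keys = sorted(row_key("s1", t) for t in [1000, 3000, 2000])
```

With this layout, reading the latest values for a sensor is a short scan from the start of that sensor's key prefix rather than a search through its whole history.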

However, when it comes to scanning huge volumes of data to find a small number of results, HBase is the better choice because it does not duplicate data. This makes HBase a good fit for text analysis (based on web pages, social network posts, dictionaries, and so on). HBase also does well with data management platforms and basic data analysis (counting, summing, and similar operations, supported by its Java coprocessors).
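The benefit of running counting and summing next to the data can be sketched in a few lines of Python: each region filters and aggregates its own rows, and only a small partial result travels back to the client. This is a conceptual model only; real coprocessors are written in Java, and the function names and sample rows here are invented for the example.

```python
def region_partial(rows, predicate):
    """Run 'inside' one region: return (count, total) for matching rows only."""
    count = 0
    total = 0
    for key, value in rows:
        if predicate(key, value):
            count += 1
            total += value
    return count, total


def aggregate(regions, predicate):
    """Client side: combine the small partial results from every region."""
    partials = [region_partial(rows, predicate) for rows in regions]
    return sum(c for c, _ in partials), sum(t for _, t in partials)


# Two hypothetical regions, each a list of (row key, numeric value) pairs
regions = [
    [("page#a", 3), ("page#b", 10)],
    [("page#m", 7), ("post#x", 100)],
]
count, total = aggregate(regions, lambda key, value: key.startswith("page#"))
```

Only one `(count, total)` pair per region crosses the network, regardless of how many rows each region had to scan.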

Shasta Tek uses HBase for one of its projects, in which the customer needs to scrape the skills and demographic information of job applicants from unstructured CVs.