Apache Kafka® is a distributed streaming platform. What exactly does that mean?

A streaming platform has three key capabilities:

- Publish and subscribe to streams of records, similar to a message queue or enterprise messaging system.

- Store streams of records in a fault-tolerant durable way.

- Process streams of records as they occur.

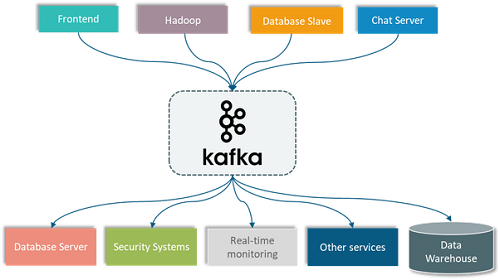

Kafka is generally used for two broad classes of applications:

- Building real-time streaming data pipelines that reliably get data between systems or applications.

- Building real-time streaming applications that transform or react to the streams of data.

First a few concepts:

- Kafka is run as a cluster on one or more servers that can span multiple datacenters.

- The Kafka cluster stores streams of records in categories called topics.

- Each record consists of a key, a value, and a timestamp.

What Kafka Does

Apache Kafka is a fast, scalable, durable, and fault-tolerant publish-subscribe messaging system.

Apache Kafka supports a wide range of use cases as a general-purpose messaging system for scenarios where high throughput, reliable delivery, and horizontal scalability are important. Apache Cassandra and Apache Spark both work very well in combination with Kafka.

Kafka use cases

- Stream Processing

- Website Activity Tracking

- Metrics Collection and Monitoring

- Log Aggregation

Kafka characteristics

- Scalability : Distributed system scales easily with no downtime

- Durability : Persists messages on disk, and provides intra-cluster replication

- Reliability : Replicates data, supports multiple subscribers, and automatically balances consumers in case of failure

- Performance : High throughput for both publishing and subscribing, with disk structures that provide constant performance even with many terabytes of stored messages.

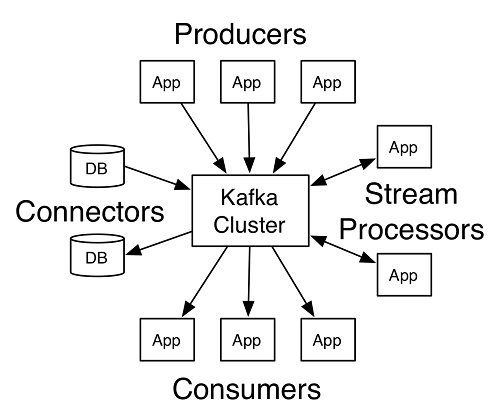

Kafka has four core APIs:

- The Producer API allows an application to publish a stream of records to one or more Kafka topics.

- The Consumer API allows an application to subscribe to one or more topics and process the stream of records produced to them.

- The Streams API allows an application to act as a stream processor, consuming an input stream from one or more topics and producing an output stream to one or more output topics, effectively transforming the input streams to output streams.

- The Connector API allows building and running reusable producers or consumers that connect Kafka topics to existing applications or data systems. For example, a connector to a relational database might capture every change to a table.